Beginner's Guide to Learning SQL in 2025

In digital marketing, SQL enables segmentation of customer data at a granularity that spreadsheet tools struggle to match. For scientific research, SQL provides reproducible methods for querying complex datasets. Because the core language is standardized, most skills transfer between PostgreSQL, MySQL, SQL Server, and other implementations, although each dialect adds its own extensions and quirks. That makes SQL knowledge unusually portable.

SQL and Data Analysis

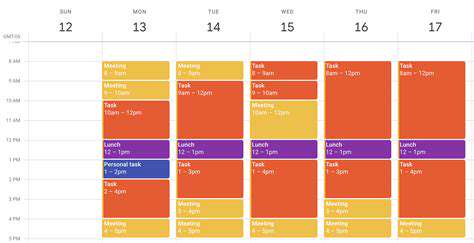

Modern data analysis increasingly happens where the data lives - in databases. SQL transforms analysts from passive reporters into active investigators, allowing immediate follow-up questions without waiting for new data extracts. This interactive analysis cycle - query, review, refine - accelerates insight generation dramatically compared to static reporting.

Window functions (an aggregate or ranking function followed by an OVER clause, usually with PARTITION BY and ORDER BY) enable sophisticated calculations directly in the database. Time-series analysis, cohort tracking, and sessionization all become possible without extracting data to external tools. SQL's growing support for statistical functions further blurs the line between database and analytical tool.
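As a minimal sketch of a window function, the snippet below computes a per-customer running total. It assumes Python's built-in sqlite3 module (with an SQLite build recent enough to support window functions) as a stand-in for whatever database you use; the `orders` table and its columns are hypothetical.

```python
import sqlite3

def running_totals(rows):
    """Return (customer, order_day, amount, running_total) for each order."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (customer TEXT, order_day TEXT, amount INTEGER)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    result = conn.execute(
        """
        SELECT customer, order_day, amount,
               SUM(amount) OVER (
                   PARTITION BY customer   -- restart the total per customer
                   ORDER BY order_day      -- accumulate in date order
               ) AS running_total
        FROM orders
        ORDER BY customer, order_day
        """
    ).fetchall()
    conn.close()
    return result
```

Unlike GROUP BY, the window keeps every input row and adds the aggregate alongside it, which is what makes cohort and time-series calculations convenient.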

Mastering Data Manipulation with SQL

Data quality issues consume a large share of analysts' time, but SQL provides powerful tools for addressing them systematically. Transaction control (BEGIN, COMMIT, ROLLBACK) ensures that a group of modifications is applied atomically, while foreign key and other constraints maintain referential integrity automatically. Well-designed SQL databases don't just store information - they actively prevent logical inconsistencies.
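The sketch below illustrates atomicity with a hypothetical `accounts` table, again assuming Python's sqlite3 module as the database. The transfer credits one account and debits another; if the debit would violate the CHECK constraint, the whole transaction is rolled back, including the credit that had already run.

```python
import sqlite3

def make_accounts():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        " id INTEGER PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
    conn.commit()
    return conn

def transfer(conn, src, dst, amount):
    """Move amount from src to dst; both updates apply or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False

def balances(conn):
    return [b for (b,) in conn.execute("SELECT balance FROM accounts ORDER BY id")]
```

The credit is deliberately issued first so that a failed debit leaves something to undo; after a failed transfer the balances are unchanged.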

Standard SQL features such as MERGE (often used for upserts) and Common Table Expressions (CTEs) simplify complex data transformations. In systems that support them, temporal tables automatically track historical changes, while generated columns maintain derived values. These features demonstrate SQL's evolution from a simple query language into a comprehensive data management language.
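SQLite does not implement MERGE, so the following sketch swaps in its `INSERT ... ON CONFLICT DO UPDATE` form to show the same upsert idea. The `stock` table is hypothetical, and the exact syntax differs in databases that offer MERGE.

```python
import sqlite3

def make_stock():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER NOT NULL)")
    return conn

def upsert_stock(conn, items):
    """Insert new SKUs; for existing SKUs, add the delivered quantity."""
    with conn:
        conn.executemany(
            """
            INSERT INTO stock (sku, qty) VALUES (?, ?)
            ON CONFLICT (sku) DO UPDATE SET qty = qty + excluded.qty
            """,
            items,
        )

def stock_levels(conn):
    return conn.execute("SELECT sku, qty FROM stock ORDER BY sku").fetchall()
```

Here `excluded` refers to the row that failed to insert, so a single statement covers both the insert and the update path.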

Career Opportunities in SQL

The job market tells a clear story: SQL proficiency consistently ranks among the most requested skills in data job postings, often appearing more frequently than any specific programming language. Unlike tool-specific skills that become obsolete, SQL knowledge compounds over a career. Even non-technical roles like product managers and marketing analysts now list SQL as a preferred qualification.

Specialized SQL roles like database tuning experts command premium salaries by optimizing query performance at scale. As data volumes grow exponentially, the ability to write efficient SQL becomes increasingly valuable. Cloud database platforms have further expanded opportunities by making powerful SQL capabilities accessible to organizations of all sizes.

Essential SQL Concepts: Setting the Foundation

Understanding the Structure of SQL Databases

Relational databases mirror how humans naturally organize information - into categorized, related tables rather than monolithic lists. This structure is the core of the relational model, and the design process known as normalization minimizes redundancy while maintaining data integrity. A well-designed schema acts as a force multiplier, making common queries intuitive and complex queries possible.
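To make the idea concrete, here is a small sketch of a normalized two-table schema, assuming Python's sqlite3 module. The `customers` and `orders` tables are hypothetical. Each customer name is stored once, so a rename touches one row, and the foreign key rejects orders that point at a missing customer.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers (customer_id),
    total_cents INTEGER NOT NULL
);
"""

def build_shop():
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves enforcement off by default
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO customers VALUES (1, 'Ana')")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)", [(10, 1, 2500), (11, 1, 900)]
    )
    conn.commit()
    return conn

def rename_customer(conn, customer_id, new_name):
    with conn:
        conn.execute(
            "UPDATE customers SET name = ? WHERE customer_id = ?",
            (new_name, customer_id),
        )

def add_order(conn, order_id, customer_id, total_cents):
    """Return False if the order references a customer that does not exist."""
    try:
        with conn:
            conn.execute(
                "INSERT INTO orders VALUES (?, ?, ?)",
                (order_id, customer_id, total_cents),
            )
        return True
    except sqlite3.IntegrityError:
        return False

def order_report(conn):
    return conn.execute(
        """
        SELECT o.order_id, c.name
        FROM orders AS o
        JOIN customers AS c ON c.customer_id = o.customer_id
        ORDER BY o.order_id
        """
    ).fetchall()
```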

Modern database systems extend this foundation with schemaless JSON columns, spatial data types, and full-text search capabilities - all queryable through SQL. Understanding how to blend relational and non-relational approaches within a single database provides tremendous flexibility in modeling real-world data.

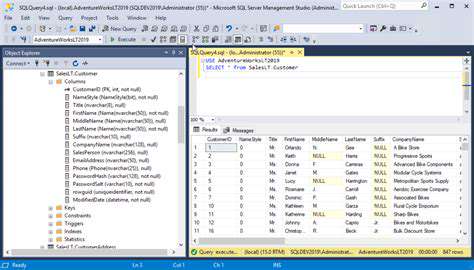

Basic SQL Commands for Data Retrieval

The SELECT statement's apparent simplicity belies its depth. Beyond basic column selection, modern SQL offers pattern matching (LIKE, SIMILAR TO), set operations (UNION, INTERSECT), and sophisticated filtering (CASE expressions in WHERE clauses). Mastering these nuances transforms queries from functional to elegant.
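A short sketch of three of these features (LIKE, INTERSECT, and a CASE expression) follows, run through Python's sqlite3 module against a hypothetical `products` table. SIMILAR TO is not shown because SQLite does not support it.

```python
import sqlite3

def demo_select():
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE products (name TEXT, category TEXT, price INTEGER);
        INSERT INTO products VALUES
            ('Green Tea', 'drink', 4),
            ('Black Tea', 'drink', 3),
            ('Espresso',  'drink', 5),
            ('Tea Towel', 'home',  9);
        """
    )
    # Pattern matching: names that end in "Tea".
    teas = conn.execute(
        "SELECT name FROM products WHERE name LIKE '%Tea' ORDER BY name"
    ).fetchall()
    # Set operation: rows that appear in both result sets.
    pricier_drinks = conn.execute(
        """
        SELECT name FROM products WHERE category = 'drink'
        INTERSECT
        SELECT name FROM products WHERE price >= 4
        ORDER BY name
        """
    ).fetchall()
    # CASE expression: derive a label from a numeric column.
    tiers = conn.execute(
        """
        SELECT name,
               CASE WHEN price >= 5 THEN 'premium' ELSE 'standard' END AS tier
        FROM products
        ORDER BY name
        """
    ).fetchall()
    conn.close()
    return teas, pricier_drinks, tiers
```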

Query readability matters as much as functionality. Thoughtful column aliasing, consistent indentation, and strategic CTE use make complex queries maintainable. These habits become critical when queries evolve into production reports or data pipelines.
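One way these habits might look in practice is sketched below: a query split into two named CTEs with aliased columns, executed through Python's sqlite3 module. The `orders` table, the CTE names, and the `:threshold` parameter are all hypothetical.

```python
import sqlite3

BIG_SPENDERS_SQL = """
WITH customer_totals AS (          -- step 1: one row per customer
    SELECT customer,
           SUM(amount) AS total_spent
    FROM orders
    GROUP BY customer
),
big_spenders AS (                  -- step 2: keep only large totals
    SELECT customer, total_spent
    FROM customer_totals
    WHERE total_spent >= :threshold
)
SELECT customer, total_spent
FROM big_spenders
ORDER BY total_spent DESC, customer
"""

def big_spenders(rows, threshold):
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    result = conn.execute(BIG_SPENDERS_SQL, {"threshold": threshold}).fetchall()
    conn.close()
    return result
```

Each CTE reads as one named step, so a later maintainer can test or change a step without untangling nested subqueries.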

Data Manipulation: INSERT, UPDATE, and DELETE

Batch operations demonstrate SQL's efficiency - a single well-constructed UPDATE can modify millions of rows consistently. The RETURNING clause (in PostgreSQL and others) hands back the affected rows themselves, bridging modification and verification steps. These capabilities make set-based SQL far more efficient than row-by-row processing in application code.
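The sketch below shows a set-based UPDATE on a hypothetical `products` table, assuming Python's sqlite3 module. To stay compatible with older SQLite builds it reports the number of affected rows through the cursor's `rowcount` rather than a RETURNING clause.

```python
import sqlite3

def build_catalog():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE products (name TEXT, category TEXT, price_cents INTEGER)"
    )
    conn.executemany(
        "INSERT INTO products VALUES (?, ?, ?)",
        [("mug", "kitchen", 1000), ("pan", "kitchen", 2500), ("lamp", "office", 4000)],
    )
    conn.commit()
    return conn

def apply_discount(conn, category, percent):
    """Discount every product in a category with one statement."""
    with conn:
        cur = conn.execute(
            "UPDATE products"
            " SET price_cents = price_cents * (100 - ?) / 100"
            " WHERE category = ?",
            (percent, category),
        )
    return cur.rowcount  # number of rows the UPDATE modified

def prices(conn):
    return conn.execute(
        "SELECT name, price_cents FROM products ORDER BY name"
    ).fetchall()
```

The application never loops over rows; the database applies the same expression to every matching row inside one transaction.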

Understanding isolation levels (READ COMMITTED, SERIALIZABLE) helps prevent subtle bugs in concurrent environments. Bulk loading commands (COPY in PostgreSQL, BULK INSERT in SQL Server) can be orders of magnitude faster than row-at-a-time inserts for data ingestion. These practical skills separate academic SQL knowledge from production-ready expertise.

Understanding SQL Joins and Relationships

Joins represent the relational model's superpower - the ability to combine related data dynamically. Beyond basic inner joins, understanding anti-joins (NOT EXISTS) and self-joins unlocks solutions to complex business questions. Lateral joins (the LATERAL keyword, where supported) let a subquery reference columns from earlier tables in the FROM clause, enabling per-row logic while maintaining set-based efficiency.
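As an illustration, the sketch below runs an anti-join and a self-join through Python's sqlite3 module. The `customers`, `orders`, and `employees` tables are hypothetical, and LATERAL is left out because SQLite does not support it.

```python
import sqlite3

def build():
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
        CREATE TABLE employees (
            employee_id INTEGER PRIMARY KEY, name TEXT, manager_id INTEGER
        );
        INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cy');
        INSERT INTO orders VALUES (100, 1), (101, 1), (102, 3);
        INSERT INTO employees VALUES (1, 'Dee', NULL), (2, 'Eli', 1), (3, 'Fay', 1);
        """
    )
    return conn

def customers_without_orders(conn):
    """Anti-join: keep customers for whom no matching order exists."""
    return [
        name
        for (name,) in conn.execute(
            """
            SELECT c.name
            FROM customers AS c
            WHERE NOT EXISTS (
                SELECT 1 FROM orders AS o WHERE o.customer_id = c.customer_id
            )
            ORDER BY c.name
            """
        )
    ]

def employee_managers(conn):
    """Self-join: the same table plays the employee and the manager role."""
    return conn.execute(
        """
        SELECT e.name, m.name
        FROM employees AS e
        LEFT JOIN employees AS m ON m.employee_id = e.manager_id
        ORDER BY e.name
        """
    ).fetchall()
```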

The choice between explicit joins (JOIN syntax) and implicit joins (comma-separated tables with the join condition in the WHERE clause) is mainly a matter of readability. For inner joins, modern optimizers generally produce the same plan for both, but explicit joins separate join conditions from filters and better convey query intent to human readers - an important consideration for maintainability.
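The two styles can be compared side by side. This sketch, assuming Python's sqlite3 module and hypothetical `customers` and `orders` tables, runs the same inner join both ways and returns both result sets.

```python
import sqlite3

EXPLICIT_JOIN = """
SELECT c.name, o.total
FROM customers AS c
JOIN orders AS o ON o.customer_id = c.id   -- join condition lives here
WHERE o.total > 10                         -- filter lives here
ORDER BY o.id
"""

IMPLICIT_JOIN = """
SELECT c.name, o.total
FROM customers AS c, orders AS o
WHERE o.customer_id = c.id                 -- join condition and filter mixed
  AND o.total > 10
ORDER BY o.id
"""

def compare_join_styles():
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total INTEGER);
        INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben');
        INSERT INTO orders VALUES (1, 1, 25), (2, 2, 5), (3, 2, 40);
        """
    )
    explicit_rows = conn.execute(EXPLICIT_JOIN).fetchall()
    implicit_rows = conn.execute(IMPLICIT_JOIN).fetchall()
    conn.close()
    return explicit_rows, implicit_rows
```

Both queries return the same rows; the difference is that forgetting the join condition in the implicit form silently produces a cross product, while the explicit form makes the omission obvious.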

Implementing Data Constraints and Integrity

Constraints act as the database's immune system, preventing invalid data from corrupting the system. CHECK constraints can enforce business rules (e.g., discount_price <= retail_price), while exclusion constraints (a PostgreSQL feature) prevent overlapping ranges in scheduling systems. Because these declarative rules apply to every writer, they often prove more reliable than application-level validation.
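The CHECK rule mentioned above can be sketched as follows, assuming Python's sqlite3 module. The `products` table layout is hypothetical; only the `discount_price <= retail_price` rule comes from the text.

```python
import sqlite3

def make_products():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE products (
            name           TEXT NOT NULL,
            retail_price   INTEGER NOT NULL CHECK (retail_price >= 0),
            discount_price INTEGER NOT NULL,
            CHECK (discount_price <= retail_price)
        )
        """
    )
    return conn

def try_insert(conn, name, retail_price, discount_price):
    """Return True if the row was stored, False if a constraint rejected it."""
    try:
        with conn:
            conn.execute(
                "INSERT INTO products VALUES (?, ?, ?)",
                (name, retail_price, discount_price),
            )
        return True
    except sqlite3.IntegrityError:
        return False
```

The rule is declared once on the table, so it holds no matter which application or script performs the insert.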

Deferred constraints (DEFERRABLE INITIALLY DEFERRED) solve chicken-and-egg problems in complex data loads. Conditional unique constraints (WHERE clauses in unique indexes) support sophisticated business rules. These advanced features demonstrate SQL's maturity as a data modeling language.
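Both ideas can be tried in SQLite, which supports deferred foreign keys and partial unique indexes. The sketch below assumes Python's sqlite3 module; all table and index names are hypothetical. The first function inserts a child row before its parent inside one transaction, which only works because the foreign key is checked at commit time. The second allows any number of cancelled subscriptions per user but at most one active one.

```python
import sqlite3

SCHEMA = """
CREATE TABLE departments (id INTEGER PRIMARY KEY);
CREATE TABLE employees (
    id      INTEGER PRIMARY KEY,
    dept_id INTEGER NOT NULL
            REFERENCES departments (id) DEFERRABLE INITIALLY DEFERRED
);
CREATE TABLE subscriptions (user_id INTEGER NOT NULL, status TEXT NOT NULL);
CREATE UNIQUE INDEX one_active_subscription
    ON subscriptions (user_id) WHERE status = 'active';
"""

def connect():
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # enforcement is off by default in SQLite
    conn.executescript(SCHEMA)
    return conn

def load_child_before_parent(conn):
    """Insert an employee before its department exists, then commit."""
    conn.execute("INSERT INTO employees (id, dept_id) VALUES (1, 10)")
    conn.execute("INSERT INTO departments (id) VALUES (10)")
    conn.commit()  # the deferred foreign key is checked here
    return conn.execute("SELECT COUNT(*) FROM employees").fetchone()[0]

def add_subscription(conn, user_id, status):
    """Return False if the partial unique index rejects the row."""
    try:
        with conn:
            conn.execute(
                "INSERT INTO subscriptions VALUES (?, ?)", (user_id, status)
            )
        return True
    except sqlite3.IntegrityError:
        return False
```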

Introduction to Indexing and Query Optimization

Index strategy requires balancing read speed against write overhead. Partial indexes (WHERE clauses) and expression indexes (on computed values) provide targeted performance benefits. Covering indexes (INCLUDE columns) can skip the table lookup entirely by answering a query from the index alone.
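A minimal sketch of a partial index and an expression index follows, assuming Python's sqlite3 module and a hypothetical `users` table. The INCLUDE syntax for covering indexes is not shown because SQLite does not support it. Each query is written so that its WHERE clause matches the corresponding index definition, which is generally what a planner needs before it can consider the index.

```python
import sqlite3

def build_users():
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE users (
            id     INTEGER PRIMARY KEY,
            email  TEXT NOT NULL,
            active INTEGER NOT NULL
        );
        -- Partial index: only rows for active users are indexed.
        CREATE INDEX idx_users_active_email ON users (email) WHERE active = 1;
        -- Expression index: indexes the lowercased email, not the raw value.
        CREATE INDEX idx_users_lower_email ON users (lower(email));
        INSERT INTO users (email, active) VALUES
            ('Ana@Example.com', 1),
            ('ben@example.com', 0),
            ('cy@example.com',  1);
        """
    )
    return conn

def find_active(conn, email):
    # Repeats the index's own condition (active = 1) as a literal.
    return conn.execute(
        "SELECT id FROM users WHERE email = ? AND active = 1", (email,)
    ).fetchall()

def find_case_insensitive(conn, email):
    # Uses the same expression, lower(email), that the index was built on.
    return conn.execute(
        "SELECT id FROM users WHERE lower(email) = lower(?)", (email,)
    ).fetchall()

def index_names(conn):
    return [
        name
        for (name,) in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'index' ORDER BY name"
        )
    ]
```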

Understanding execution plans reveals the optimizer's decision-making. Sequential scans aren't inherently bad - for small tables or full-table operations, they're optimal. The art lies in recognizing when an index would help and choosing the right type (B-tree, hash, GiST, etc.) for the access pattern.
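To see a plan change, the sketch below asks SQLite for its query plan before and after adding an index, using `EXPLAIN QUERY PLAN` through Python's sqlite3 module. The `events` table and index name are hypothetical, and the plan wording varies between SQLite versions and differs entirely in other databases (PostgreSQL and MySQL use EXPLAIN with their own output formats).

```python
import sqlite3

def plan_details(conn, sql, params=()):
    """Return the human-readable detail column of SQLite's query plan."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

def demo_plans():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, kind TEXT)"
    )
    conn.executemany(
        "INSERT INTO events (user_id, kind) VALUES (?, ?)",
        [(i % 50, "click") for i in range(1000)],
    )
    conn.commit()

    sql = "SELECT kind FROM events WHERE user_id = ?"
    before = plan_details(conn, sql, (7,))   # no usable index yet
    conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
    after = plan_details(conn, sql, (7,))    # planner can now use the index
    conn.close()
    return before, after

if __name__ == "__main__":
    before, after = demo_plans()
    print("before:", before)
    print("after: ", after)
```

Before the index exists the plan reports a full scan of `events`; afterwards it reports a search that names `idx_events_user`.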